In this blog, we describe how you can build your own Dagster IO managers and use AWS Redshift as an example. We elaborate on the fundamentals on IO managers, and the tips and tricks we’ve learned building different IO managers over time.

A short introduction on IO managers #

From the IO manager documentation of Dagster:

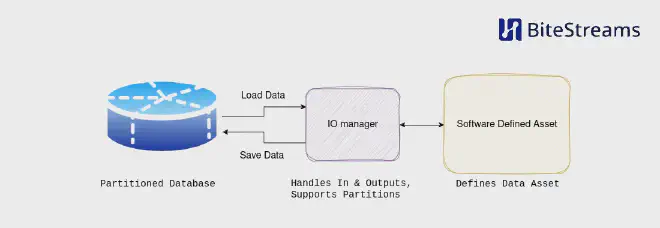

I/O managers are user-provided objects that store asset and op outputs and load them as inputs to downstream assets and ops. They can be a powerful tool that reduces boilerplate code and easily changes where your data is stored.

IO managers, combined with partitions, are one of the most powerful concepts in Dagster; in essence, they allow you to avoid re-writing code to load and retrieve data from different sources, often your database of choice. This is immensely powerful once you realize that much of your code is just reading and writing to databases, file storage, etc. The interesting part of your code is the transformations you perform on the data; loading and retrieving data is necessary but does not add much value. Thus, when the IO manager is a great fit for our problem, we should consider using one.

When not to use an IO Manager #

When all you have is a hammer, everything looks like a nail.

Every programmer has encountered this, a good example being the RDBMS. As with any abstraction, it works great until it doesn’t. The dagster IO manager is just one tool in your toolbox, and when it doesn’t fit the problem at hand, you shouldn’t use it.

The IO manager has several assumptions that make it great for loading data into a function, doing something with it, and then storing some data. However, if this is not the abstract pattern of your problem, the IO manager might not be of much help.

For instance, if your workflow involves complex data transformations that don’t neatly fit into the input-process-output model supported by the IO manager, trying to force it into that framework could lead to convoluted and inefficient code. In such cases, it’s better to step back and evaluate whether using the IO manager aligns with the natural flow of your problem or if a different approach might be more suitable.

Ultimately, choosing the right tool for the job involves understanding the problem domain, considering the specific requirements and constraints, and being willing to adapt and explore alternative solutions when necessary. The dagster IO manager is a valuable tool in your toolbox, but it’s important to recognize when it’s the right tool for the task at hand and when another approach might be more appropriate.

Best Practices and Tips When Developing IO Managers #

Before we dive into the implementation details of an IO manager, lets first highlight some best practices and tips for developing and utilizing IO managers effectively. In this section we’ve tried to note the most important points to consider.

1. Error Handling and Resilience #

Implement robust error handling mechanisms within your IO manager to gracefully handle failures and recover from errors. This includes handling network issues, authentication errors, and data inconsistencies to ensure the reliability of your data pipelines.

2. Automated Schema Creation #

Automate schema creation within your IO manager where possible. This streamlines data storage, reduces errors, and ensures consistency. Dynamic schema generation based on input data or templates enhances scalability and adaptability, optimizing Dagster pipeline efficiency. Also consider what happens if the schema in the database doesn’t match the schema of the data input, throwing an error in this instance can be a good idea.

3. Ensure Idempotency #

Implementing idempotence using Dagster partitioning adds an extra layer of reliability to your data operations. By partitioning your data into distinct segments based on certain criteria (like time intervals or data sources), you create a structured approach to handling retries and failures. Each partition represents a self-contained unit of work, making it easier to track and manage data processing tasks. If something goes wrong during processing, you can retry individual partitions without affecting others, ensuring consistent results across your pipeline. Leveraging Dagster partitioning in conjunction with idempotent logic reinforces the integrity of your data operations, minimizing the risk of errors and inconsistencies. This is pretty much a must-have.

4. Performance Optimization #

Optimize the performance of your IO manager by minimizing latency and maximizing throughput. This involves optimizing data transfer mechanisms, leveraging caching strategies, and parallelizing data operations where possible to improve overall pipeline efficiency. Concrete strategies are batching your operations, doing work concurrently or in parallel, and caching heavy operations.

5. Reusability and Modularity #

Design your custom IO manager with reusability and modularity in mind. Consider abstracting common functionality into reusable components or libraries to facilitate easier integration across multiple pipelines and projects.

Having laid the groundwork for what an IO manager should look like, let’s now look at a real-world example of how to set up an IO manager.

Implementing a Redshift IO Manager in Dagster: A Step-by-Step Guide #

In this guide, we’ll implement a custom IO manager for Redshift, Amazon Web Services’ (AWS) data warehousing solution. We will focus on storing data from Redshift clusters within Dagster pipelines. The custom IO manager we’ll develop will handle schema management, data saving, and partition management, providing a comprehensive solution for interacting with Redshift databases within Dagster workflows. The in and output types of this IO manager are pandas, one of the most used libraries. For better performance, we would recommend using other libraries like pyarrow or polars.

Introduction to the Redshift IO Manager #

Our Redshift IO manager will bridge the gap between Dagster pipelines and a Redshift cluster, facilitating the movement of data between these environments. This custom IO manager will leverage AWS services such as S3, which allows us to store data as efficiently as possible.

The key functionalities of our Redshift IO manager include:

- Initialization and Configuration: Setting up AWS credentials, Redshift cluster details, S3 bucket configurations, and initializing the IO manager.

- Schema Management: Automatically managing database schemas and table structures in Redshift based on the data being processed.

- Saving Data: We efficiently store data from Pandas DataFrames into Redshift tables, ensuring data integrity and consistency. If the data already exists, we need to remove and replace the old dataset.

- Loading Data: Retrieving data from Redshift tables into Pandas DataFrames, enabling seamless integration with downstream processing. We have decided to leave this out of this guide.

1. Initialization and Configuration #

To initialize our RedshiftPandasIOManager, we have the following definition:

|

|

To set up the Redshift Pandas IO manager, provide AWS credentials, Redshift cluster details, and S3 bucket configurations. Initialize the IO manager as follows:

|

|

When it’s given as the io_manager in the resources dictionary of your Dagster Definitions object, it will be used for all of your assets by default.

2. Schema Management #

Schema management is critical for maintaining alignment between Redshift databases and the data being processed. Our custom Redshift IO manager automates schema creation and table structure definition to streamline database interactions.

Our schema management functionality comprises:

- Schema Creation: Dynamically creating schemas and tables in Redshift to accommodate incoming data.

- Table Structure Definition: Deriving Redshift table structure from the schema of the input data.

Let’s explore the implementation within RedshiftPandasIOManager:

create_schemasMethod: This method establishes a connection to Redshift and executes SQL queries to create schemas and tables. The table structure is defined based on the column types extracted from the input data.

|

|

_get_table_structureFunction: This helper function analyzes the structure of the input data and returns a dictionary mapping column names to their respective data types.

|

|

Automating schema management ensures database readiness for data ingestion, making sure the input data is compatible with the database schema.

Next, we’ll delve into efficiently clearing and saving data from Pandas DataFrames into Redshift tables.

3. Saving Data #

The main functionality of our custom Redshift IO manager is efficiently storing data from Pandas DataFrames into Redshift tables. This process ensures that data is ingested into Redshift with integrity and consistency, ready for analysis and further processing.

The saving of data functionality involves several key steps:

- Data Preparation: Before saving data to Redshift, we prepare the data by converting it into a suitable format. In our implementation, we convert the Pandas DataFrame into a compressed CSV file stored in Amazon S3.

- Data Removal and Loading: Once the data is prepared, we handle the removal of existing data and then load the new data into Redshift using the COPY command. This command efficiently copies data from Amazon S3 into Redshift tables, leveraging parallel processing for optimal performance.

- Partition Management: Optionally, we handle partitioning of data within Redshift tables. This allows for efficient loading and storing of large datasets with Dagster partition keys by organizing data into logical partitions based on a specified partition key.

Let’s explore the implementation of the saving data functionality within our RedshiftPandasIOManager class:

handle_outputMethod: This method is responsible for handling the output data and initiating the process of saving it into Redshift. Inside this method:- We first check if the output data is empty.

- We construct the full table name in Redshift based on the schema and table name extracted from the output context.

- If the output context specifies partition information, we retrieve the partition key and partition expression.

- We upload the output data to Amazon S3 using the

upload_data_to_s3method. - We retrieve the structure of the output data using the

_get_table_structurefunction. - We create or update the schema and table structure in Redshift using the

create_schemasmethod. - Finally, we save the data into Redshift using the

save_datamethod.

save_dataMethod: This method executes SQL queries to clear existing data (if applicable) and copy the new data from Amazon S3 into the Redshift table. It handles both full table refreshes and partition updates. Here’s how the method works:

|

|

In this method, we handle removing existing data before loading new data. This ensures the Redshift table is updated with the latest information, maintaining data integrity.

upload_data_to_s3Method: This method uploads the output data in CSV (gzipped) format to Amazon S3, preparing it for loading into Redshift.

|

|

By implementing efficient data-saving mechanisms, our Redshift IO manager ensures that data is seamlessly integrated into Redshift for analysis and processing within Dagster pipelines.

Potential Improvements #

To keep the scope of this blog somewhat simple, we have not included all the bells and whistles you might want to have. Potential improvements to the IO manager could be the following:

- Dagster Key ranges are currently not supported. This wouldn’t be very difficult to implement.

- It uses Pandas Dataframes, which is not the fastest and is not always an ideal library for data engineering because it performs dynamic type conversions. Polars or Pyarrow could significantly speed up the loading and writing of tables.

- The data is now uploaded using CSV format; a better format would be Parquet tables.

- Large batches of data might cause the system to go out of memory (OOM); this could be fixed using iterators and batched files. Similarly Redshift can give errors if you upload to large CSV files with the

COPYcommand, splitting these files up into circa 100mb size speeds up loading of the data and prevents Redshift giving an error. - Data loading is not yet implemented; it could be implemented similarly to how the data is currently stored.

The Code #

The full code for the IO manager can be found here: Dagster Redshift IO manager

Conclusion #

In this blog, we’ve explored the fundamentals of Dagster IO managers and delved into the process of building a custom IO manager for AWS Redshift. Through an in-depth analysis of each step, from schema management to data saving, we’ve provided insights into best practices and tips for developing robust and efficient IO managers.

Looking ahead, there are numerous opportunities for further enhancements and optimizations to our Redshift IO manager, such as supporting key ranges, optimizing data formats and loading strategies, and improving performance and scalability.

In conclusion, Dagster IO managers are a powerful tool for simplifying data pipeline development and management, offering flexibility, modularity, and reliability. By understanding the core principles and best practices outlined in this blog, data engineers have a robust foundation to develop their own IO managers.